Why is everyone suddenly trying to decode animal communication?

You may have seen the headlines “Machine learning attempts to decode sperm whales” and “The animals are talking. What does it mean?”.

Why now? And how? The answer is actually pretty simple. We suddenly have new and powerful computer tools, machine learning and artificial intelligence, that promise to do things the average human brain cannot. Sort of the superhero of decoding. And it’s true: machine learning, deep learning, and artificial intelligence are rocking the biological world. Meanwhile, one of humanity’s long-standing fascinations is talking to animals, or at least decoding their signals to see whether they have language. Let’s look at how we got here, where we are going, and the challenging steps along the way.

Artificial Intelligence, or AI, has been evolving rapidly and entering our daily lives for a decade. Siri, Alexa, Google searches, and Facebook all rely on mountains of human-generated data in which they look for patterns. And although there is no magic program that lets us throw in data and get a clean result, we are getting close.

Machine Learning refers to software, usually built from various algorithms such as neural nets and other deep learning techniques, that does the heavy lifting. In Supervised Learning, an expert gives the computer direction and feedback on its results to improve how it performs. In Unsupervised Learning, we just throw in data and see what comes out. There is also a hybrid version, Semi-Supervised Learning, where you might at least tell the computer “this is junk, ignore it, but please categorize the rest”.
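To make the difference between supervised and unsupervised learning a little more concrete, here is a minimal Python sketch. It is not the software we use: describing each sound clip by two numbers (a hypothetical peak frequency in kHz and a duration in seconds) is an assumption, and all the values are invented for illustration. The supervised half learns one average point (a centroid) per expert-supplied label and assigns a new clip to the nearest one. The unsupervised half runs k-means, which groups unlabeled clips by similarity and leaves it to us to decide what the groups mean.

```python
import math
import random

# Each clip is a hypothetical feature pair: (peak frequency in kHz, duration in s).
# All numbers below are invented for illustration.


def centroid(points):
    """Average of a list of equal-length feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


# Supervised: an expert labels examples; we learn one centroid per label.
def train_supervised(labeled):
    groups = {}
    for features, label in labeled:
        groups.setdefault(label, []).append(features)
    return {label: centroid(pts) for label, pts in groups.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the clip."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Unsupervised: no labels; k-means groups clips by similarity.
def kmeans(points, k, iterations=20, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k randomly chosen clips
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(centers[i], p))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [centroid(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


if __name__ == "__main__":
    labeled = [((10.0, 0.8), "whistle"), ((12.0, 1.0), "whistle"),
               ((60.0, 0.05), "click"), ((70.0, 0.04), "click")]
    model = train_supervised(labeled)
    print(predict(model, (11.0, 0.9)))

    unlabeled = [p for p, _ in labeled] + [(11.5, 0.7), (65.0, 0.06)]
    centers, clusters = kmeans(unlabeled, k=2)
    print([len(c) for c in clusters])
```

With real acoustic measurements, the features would need to be scaled first; in this toy example the frequency values dominate the distance.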

Along with Dr. Thad Starner and colleagues from Georgia Institute of Technology we have been trying to decode communication signals from our dolphins in two ways:

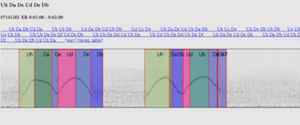

1.) Using Machine Learning, we have built models of their sounds and a user interface that lets us dig into our data and extract patterns. This work began as the dissertation of Daniel Kohlsdorf, a PhD student at Georgia Tech. It later grew into a 3-year grant from the Templeton World Charity Foundation under its “Diverse Intelligence” program. This grant really jump-started our work and helped us focus on decoding dolphin vocal sequences.

For further reading about the Templeton World Charity Foundation’s Diverse Intelligence Program, please visit templetonworldcharity.org/projects-database/0468

2.) C.H.A.T. (Cetacean Hearing Augmentation Technology) is an underwater computer capable of playing and receiving sounds. Designed as a simple system for human/dolphin communication, it also incorporates real-time sound recognition (using Machine Learning algorithms) to help identify vocalizations in the water. The first version (CHAT SENIOR) was a large, chest-worn aluminum housing that worked well but was bulky and required a tech team to keep it running. Since then we have gone modular, creating an arm-worn module that plays sounds (CHAT LITE) and a chest-worn smartphone that both receives and translates sounds (CHAT JUNIOR) for the researcher in the water. We have also been able to use off-the-shelf hardware, namely smartphones, for this system. Because they are much more user-friendly, CHAT LITE and CHAT JUNIOR let us spend much more time in the water with the dolphins and require less of a tech team onboard at all times. The system still depends on machine learning, but the hardware has shrunk, making it easier for the researcher to swim alongside our streamlined dolphins.

Both of these research projects depend on AI. In our case, we use Semi-Supervised Learning: there are so many noisy signals underwater that the computer needs guidance to ignore them when helping us categorize the actual dolphin sounds. Who knows where this technology will lead us?
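The “ignore the junk, categorize the rest” idea can be illustrated with self-training, one common semi-supervised technique. This is a hedged sketch, not our actual pipeline: the two-number features, the label names (“whistle”, “click”, “noise”), the confidence margin, and all values are assumptions chosen for illustration. A few hand-labeled clips, including examples of noise, seed a nearest-centroid classifier. Unlabeled clips that sit clearly closer to one class than to any other are pseudo-labeled and folded back in, and anything labeled noise is dropped at the end. Ambiguous clips stay unlabeled for a person to review.

```python
import math

# Hypothetical features per clip: (peak frequency in kHz, duration in s).


def centroid(points):
    """Average of a list of equal-length feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def self_train(labeled, unlabeled, margin=2.0, rounds=5):
    """Semi-supervised self-training with a nearest-centroid classifier.

    labeled: list of (features, label) pairs with at least two distinct labels.
    unlabeled: list of feature tuples.
    A clip is pseudo-labeled only if its nearest centroid is at least `margin`
    times closer than the second nearest (an illustrative confidence rule).
    Returns (labeled plus pseudo-labeled pairs, clips left unlabeled).
    """
    labeled = list(labeled)
    pool = list(unlabeled)
    for _ in range(rounds):
        groups = {}
        for features, label in labeled:
            groups.setdefault(label, []).append(features)
        centers = {label: centroid(pts) for label, pts in groups.items()}

        remaining = []
        added = 0
        for f in pool:
            ranked = sorted(centers, key=lambda label: math.dist(centers[label], f))
            d1 = math.dist(centers[ranked[0]], f)
            d2 = math.dist(centers[ranked[1]], f)
            if d1 * margin <= d2:
                labeled.append((f, ranked[0]))  # confident: adopt the pseudo-label
                added += 1
            else:
                remaining.append(f)  # ambiguous: leave for a human
        pool = remaining
        if added == 0:  # nothing new was learned this round
            break
    return labeled, pool


if __name__ == "__main__":
    seeds = [((10.0, 0.8), "whistle"), ((60.0, 0.05), "click"), ((1.0, 3.0), "noise")]
    clips = [(11.0, 0.9), (62.0, 0.04), (1.5, 2.5), (35.0, 0.5)]
    result, unsure = self_train(seeds, clips)
    dolphin_sounds = [(f, label) for f, label in result if label != "noise"]
    print(dolphin_sounds)
    print("needs review:", unsure)
```

In this toy run the clip halfway between a whistle and a click is never forced into a category; leaving hard cases for a person is one way the “guidance” described above can be built in.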

-Dr. Denise Herzing

Research Director and Founder, WDP

